Complete H20 server deployment and restart testing (#51)

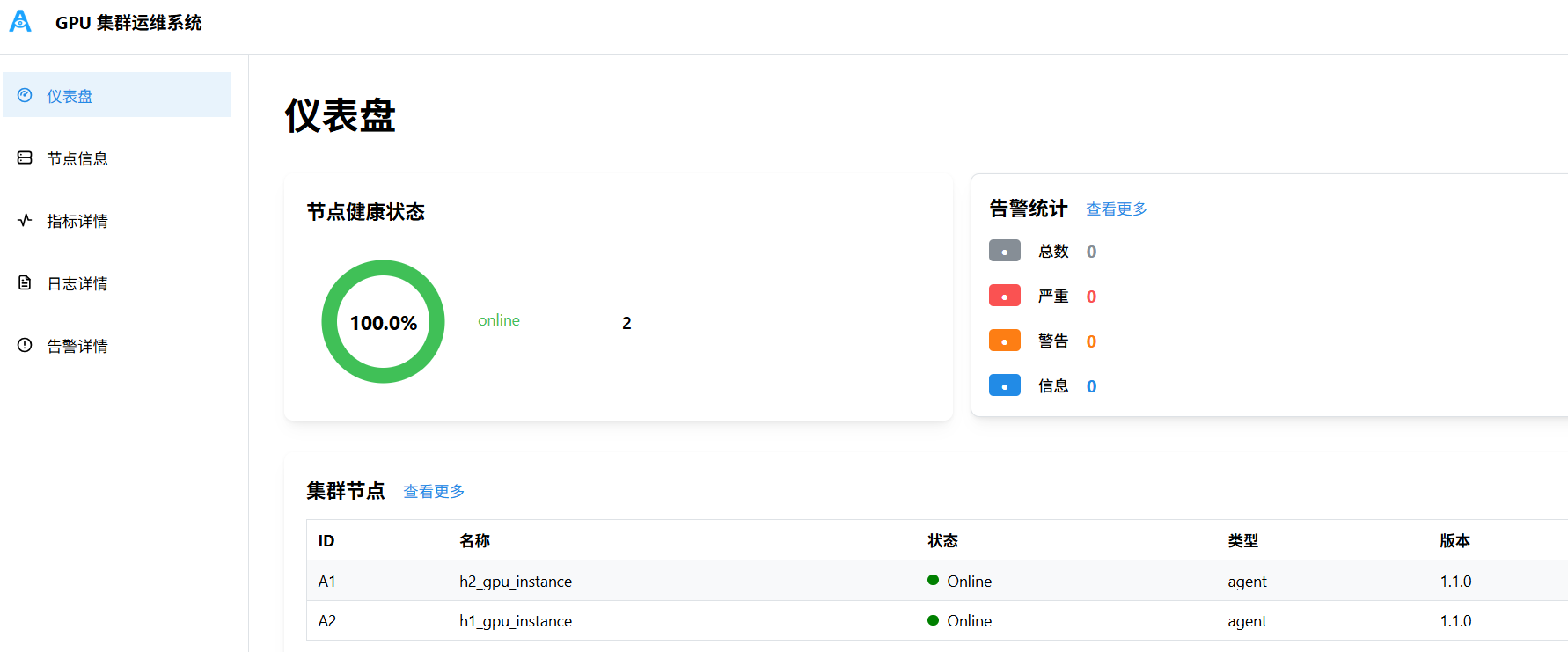

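Current deployment:
- h1: server & client deployed
- h2: client deployed
- Deployed on 2025-11-25
- Deployment directories: /home2/argus/server, /home2/argus/client
- Deployment account: argus

Network topology:
- h1 acts as the docker swarm manager
- h2 joins the docker swarm as a worker
- An overlay network is created on the docker swarm

Access:
- SSH to the h1 server and forward ports 20006-20011 to the laptop;
- Portal URL: http://localhost:20006/dashboard

Deployment screenshots:

Notes:
- Each server container uses its domain name as an alias on the overlay network, so services are reachable by domain name. The current version disables bind for name resolution, because handling container IP changes after a restart through bind is complex and unstable.
- The client image is built with the installation package embedded. The installation flow runs when the container first starts, and later container restarts skip the installation step.
- UID/GID: the deployment uses the argus account, uid=2133, gid=2015.

Reviewed-on: #51
Reviewed-by: sundapeng <sundp@mail.zgclab.edu.cn>
Reviewed-by: xuxt <xuxt@zgclab.edu.cn>
Reviewed-by: huhy <husteryezi@163.com>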
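The access path described in the commit message (forwarding ports 20006-20011 from h1 to the laptop) could be set up roughly as follows. This is only a sketch: `forward_cmd` is a hypothetical helper, and the login target `argus@h1` is an assumption (the message names `argus` as the deployment account, not necessarily the SSH login). The function only prints the command, it does not run it.

```bash
#!/usr/bin/env bash
# Print an ssh command that forwards a contiguous local port range to the
# same ports on the remote host. Nothing is executed; the command is echoed.
# Hypothetical helper; "argus@h1" below is an assumed login target.
forward_cmd() {
  local target="$1" first="$2" last="$3"
  local args=() port
  for (( port = first; port <= last; port++ )); do
    args+=("-L" "${port}:localhost:${port}")
  done
  # -N: do not run a remote command, only keep the tunnels open
  echo "ssh -N ${args[*]} ${target}"
}

forward_cmd "argus@h1" 20006 20011
```

With the tunnels open, the portal URL from the message (http://localhost:20006/dashboard) resolves on the laptop.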

parent b6da5bc8b8
commit 34cb239bf4

build/README.md (Normal file, +150)

@@ -0,0 +1,150 @@

# ARGUS Unified Build Script Guide (build/build_images.sh)

This directory provides a single entry-point script, `build/build_images.sh`, which covers three common scenarios:
- System integration tests (src/sys/tests)
- Swarm system integration tests (src/sys/swarm_tests)
- Building offline installation packages (deployment_new: Server/Client-GPU)

This document also covers the UID/GID resolution rules, the image tag policy, common options, and the retry mechanism.

## Prerequisites
- Docker Engine ≥ 20.10 (≥ 23.x/24.x recommended)
- Docker Compose v2 (the `docker compose` subcommand)
- Optional: intranet build mirrors (`--intranet`)

## UID/GID rules (for the in-container user and volume ownership)
- Non-pkg builds (core/master/metric/web/alert/sys/gpu_bundle/cpu_bundle):
  - Read `configs/build_user.local.conf` → `configs/build_user.conf`;
  - Can be overridden by the environment variables `ARGUS_BUILD_UID` and `ARGUS_BUILD_GID`;
- pkg builds (`--only server_pkg`, `--only client_pkg`):
  - Read `configs/build_user.pkg.conf` (preferred) → `build_user.local.conf` → `build_user.conf`;
  - Can be overridden by environment variables;
- The CPU bundle explicitly follows the non-pkg chain (it does not read `build_user.pkg.conf`).
- Note: only the Docker layers that depend on UID/GID are rebuilt when those arguments change, so switching between build profiles does not produce a mis-packaged image.
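The lookup order above can be sketched as a small function. This is not the project's `load_build_user` (which lives in `scripts/common/build_user.sh` and is not shown here); `resolve_build_ids` is a hypothetical name, and the file format (`UID=<n>` / `GID=<n>` lines) is an assumption made for illustration.

```bash
#!/usr/bin/env bash
# Sketch of the lookup order only; not the project's load_build_user.
# Assumed file format (not shown in the source): lines "UID=<n>" and "GID=<n>".
resolve_build_ids() {
  local config_dir="$1" profile="${2:-default}"
  local candidates=() f uid="" gid=""
  # pkg builds consult build_user.pkg.conf first; other builds skip it.
  [[ "$profile" == "pkg" ]] && candidates+=("build_user.pkg.conf")
  candidates+=("build_user.local.conf" "build_user.conf")
  for f in "${candidates[@]}"; do
    if [[ -f "$config_dir/$f" ]]; then
      uid="$(sed -n 's/^UID=//p' "$config_dir/$f" | head -n1)"
      gid="$(sed -n 's/^GID=//p' "$config_dir/$f" | head -n1)"
      break   # the first existing file wins
    fi
  done
  # Environment variables take precedence over any file.
  echo "${ARGUS_BUILD_UID:-$uid}:${ARGUS_BUILD_GID:-$gid}"
}
```

Calling `resolve_build_ids configs pkg` prints a `uid:gid` pair. Printing the pair instead of exporting variables keeps the sketch free of side effects.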

## Image tag policy
- Non-pkg builds: output `:latest` by default.
- `--only server_pkg`: every image is output directly as `:<VERSION>` (`:latest` is not overwritten).
- `--only client_pkg`: the GPU bundle is output as `:<VERSION>` only (`:latest` is not overwritten).
- `--only cpu_bundle`: outputs `:<VERSION>` only by default; add `--tag-latest` to also tag `:latest`, for compatibility with the default swarm_tests compose file.
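The policy above can be read as a pure mapping from build target to tags. The sketch below is illustrative only: `tags_for` is a hypothetical name and it never calls docker. The `gpu_bundle` branch reflects the script in this commit, which outside pkg mode builds `:<VERSION>` and additionally tags `:latest`.

```bash
#!/usr/bin/env bash
# Sketch of the tag policy as a pure function. It only prints the tags a
# target would receive; tags_for is a hypothetical name.
tags_for() {
  local target="$1" version="${2:-}" tag_latest="${3:-false}"
  case "$target" in
    server_pkg|client_pkg)
      echo "$version" ;;                 # versioned only, :latest untouched
    cpu_bundle)
      if [[ "$tag_latest" == true ]]; then
        echo "$version latest"           # --tag-latest adds :latest
      else
        echo "$version"
      fi ;;
    gpu_bundle)
      echo "$version latest" ;;          # non-pkg GPU bundle also tags :latest
    *)
      echo "latest" ;;                   # other non-pkg builds default to :latest
  esac
}
```

For example, `tags_for cpu_bundle 20251114 true` prints both the version tag and `latest`.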

## Default build targets without --only
When `--only` is not given, the script builds the "base image set" (no bundles and no installation packages):
- core: `argus-elasticsearch:latest`, `argus-kibana:latest`, `argus-bind9:latest`
- master: `argus-master:latest` (non-offline)
- metric: `argus-metric-ftp:latest`, `argus-metric-prometheus:latest`, `argus-metric-grafana:latest`
- web: `argus-web-frontend:latest`, `argus-web-proxy:latest`
- alert: `argus-alertmanager:latest`
- sys: `argus-sys-node:latest`, `argus-sys-metric-test-node:latest`, `argus-sys-metric-test-gpu-node:latest`

Note: the default tag is `:latest`; UID/GID follow the non-pkg chain (`build_user.local.conf → build_user.conf`, overridable by environment variables).

## Common options
- `--intranet`: use intranet build arguments (enabled per Dockerfile as needed).
- `--no-cache`: disable the Docker layer cache.
- `--only <list>`: comma-separated targets, e.g. `--only core,master,metric,web,alert`.
- `--version YYMMDD`: date tag for bundle/pkg builds (required for cpu_bundle/gpu_bundle/server_pkg/client_pkg); the examples in this document use 8-digit values such as 20251114.
- `--client-semver X.Y.Z`: semantic version of the all-in-one-full client (optional).
- `--cuda VER`: CUDA base image version for the GPU bundle (default 12.2.2).
- `--tag-latest`: also tag `:latest` when building the CPU bundle.

## Automatic retry
- A failed single-image build is retried automatically (3 attempts by default, 5 s apart).
- The final attempt automatically runs with `DOCKER_BUILDKIT=0`, which mitigates "failed to receive status: context canceled".
- Tunable through the `ARGUS_BUILD_RETRIES` and `ARGUS_BUILD_RETRY_DELAY` environment variables.
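A generic retry loop in the spirit of the description above might look like this. It is a sketch, not the script's `build_image`: `retry_build` is a hypothetical name and the wrapped command is passed as arguments. The final attempt sets `DOCKER_BUILDKIT=0` as a plain environment prefix, so the argument list is passed through unchanged.

```bash
#!/usr/bin/env bash
# Generic retry loop: run "$@" up to ARGUS_BUILD_RETRIES times (default 3),
# sleeping ARGUS_BUILD_RETRY_DELAY seconds (default 5) between attempts.
# retry_build is a hypothetical name used for illustration.
retry_build() {
  local tries="${ARGUS_BUILD_RETRIES:-3}"
  local delay="${ARGUS_BUILD_RETRY_DELAY:-5}"
  local attempt
  for (( attempt = 1; attempt <= tries; attempt++ )); do
    if (( attempt == tries )); then
      # Final attempt: run the command with BuildKit disabled.
      DOCKER_BUILDKIT=0 "$@" && return 0
    else
      "$@" && return 0
    fi
    # Wait before the next attempt, but not after the last one.
    (( attempt < tries )) && sleep "$delay"
  done
  return 1
}
```

Usage would be along the lines of `retry_build docker build -t some-image:latest .`, where the image name is a placeholder.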

---

## Scenario 1: System integration tests (src/sys/tests)
Builds the images used for system-level end-to-end tests (default `:latest`).

Example:
```
# Build core and the surrounding modules
./build/build_images.sh --only core,master,metric,web,alert,sys
```
Outputs:
- Local images: `argus-elasticsearch:latest`, `argus-kibana:latest`, `argus-master:latest`, `argus-metric-ftp:latest`, `argus-metric-prometheus:latest`, `argus-metric-grafana:latest`, `argus-alertmanager:latest`, `argus-web-frontend:latest`, `argus-web-proxy:latest`, `argus-sys-node:latest`, and others.

Notes:
- UID/GID are read from `build_user.local.conf → build_user.conf` (or overridden by environment variables).
- For running sys/tests, see `src/sys/tests/README.md`.

---

## Scenario 2: Swarm system integration tests (src/sys/swarm_tests)
Requires the server-side images plus the CPU node bundle image.

Steps:
1) Build the server-side images (default `:latest`)
```
./build/build_images.sh --only core,master,metric,web,alert
```
2) Build the CPU bundle (directly FROM ubuntu:22.04)
```
# Output the version tag only
./build/build_images.sh --only cpu_bundle --version 20251114
# For compatibility with the swarm_tests default of latest:
./build/build_images.sh --only cpu_bundle --version 20251114 --tag-latest
```
3) Run the Swarm tests
```
cd src/sys/swarm_tests
# If latest was not tagged, set this first:
export NODE_BUNDLE_IMAGE_TAG=argus-sys-metric-test-node-bundle:20251114
./scripts/01_server_up.sh
./scripts/02_wait_ready.sh
./scripts/03_nodes_up.sh
./scripts/04_metric_verify.sh # verify Prometheus/Grafana/nodes.json and the log pipeline
./scripts/99_down.sh # tear down
```
Outputs:
- Local images: `argus-*:latest` and `argus-sys-metric-test-node-bundle:20251114` (or latest).
- `swarm_tests/private-*`: runtime persistent files.

Notes:
- The CPU bundle build user follows the non-pkg chain (local.conf → conf).
- `04_metric_verify.sh` has built-in Fluent Bit startup and config-fix logic; if a component is occasionally not ready, rerunning the script once is usually enough to pass.

---

## Scenario 3: Building offline installation packages (deployment_new)
Both the Server and the Client-GPU packages are built "version-direct": only the `:<VERSION>` tag is output and `:latest` is left unchanged.

1) Server package
```
./build/build_images.sh --only server_pkg --version 20251114
```
Outputs:
- Local images: `argus-<module>:20251114` (latest is not touched).
- Package: `deployment_new/artifact/server/20251114/` and `server_20251114.tar.gz`
  - Package contents: one tar.gz per image, compose/.env.example, scripts (config/install/selfcheck/diagnose, etc.), docs, manifest/checksums.

2) Client-GPU package
```
# Builds the GPU bundle as well (:<VERSION> only, latest is not touched) and generates the client package
./build/build_images.sh --only client_pkg --version 20251114 \
  --client-semver 1.44.0 --cuda 12.2.2
```
Outputs:
- Local image: `argus-sys-metric-test-node-bundle-gpu:20251114`
- Package: `deployment_new/artifact/client_gpu/20251114/` and `client_gpu_20251114.tar.gz`
  - Package contents: GPU bundle image tar.gz, busybox.tar, compose/.env.example, scripts (config/install/uninstall), docs, manifest/checksums.

Notes:
- pkg builds use the UID/GID from `configs/build_user.pkg.conf` (overridable by environment variables).
- `PKG_VERSION=<VERSION>` in the packaged `.env.example` matches the image tag exactly.

---

## FAQ
- The build reports `failed to receive status: context canceled`?
  - Per-image retries are built in, and the final attempt disables BuildKit. Retrying with `--intranet` and `--no-cache`, or after `docker builder prune -f`, is also recommended.
- If I run a non-pkg build (latest) first and then a pkg build (version), can the wrong content end up in the package?
  - No. Layers that involve UID/GID are rebuilt because the arguments change, other layers are reused from cache, and the owner and runtime account of the final pkg artifacts follow `build_user.pkg.conf`.
- swarm_tests pulls `:latest` by default, but I only built the `:<VERSION>` CPU bundle. What now?
  - Run `export NODE_BUNDLE_IMAGE_TAG=argus-sys-metric-test-node-bundle:<VERSION>` before the tests, or add `--tag-latest` at build time.

---

Further automation (for example, generating a BUILD_SUMMARY.txt that summarizes image digests and build arguments) can be added to the pkg output stage as needed.

@@ -12,7 +12,11 @@ Options:
   --master-offline  Build master offline image (requires src/master/offline_wheels.tar.gz)
   --metric  Build metric module images (ftp, prometheus, grafana, test nodes)
   --no-cache  Build all images without using Docker layer cache
-  --only LIST  Comma-separated targets to build: core,master,metric,web,alert,sys,all
+  --only LIST  Comma-separated targets to build: core,master,metric,web,alert,sys,gpu_bundle,cpu_bundle,server_pkg,client_pkg,all
+  --version DATE  Date tag used by gpu_bundle/server_pkg/client_pkg (e.g. 20251112)
+  --client-semver X.Y.Z  Override client semver used in all-in-one-full artifact (optional)
+  --cuda VER  CUDA runtime version for NVIDIA base (default: 12.2.2)
+  --tag-latest  Also tag bundle image as :latest (for cpu_bundle only; default off)
   -h, --help  Show this help message
 
 Examples:

@@ -32,8 +36,20 @@ build_metric=true
 build_web=true
 build_alert=true
 build_sys=true
+build_gpu_bundle=false
+build_cpu_bundle=false
+build_server_pkg=false
+build_client_pkg=false
+need_bind_image=true
+need_metric_ftp=true
 no_cache=false
 
+bundle_date=""
+client_semver=""
+cuda_ver="12.2.2"
+DEFAULT_IMAGE_TAG="latest"
+tag_latest=false
+
 while [[ $# -gt 0 ]]; do
   case $1 in
     --intranet)

@@ -63,7 +79,7 @@ while [[ $# -gt 0 ]]; do
       fi
       sel="$2"; shift 2
       # reset all, then enable selected
-      build_core=false; build_master=false; build_metric=false; build_web=false; build_alert=false; build_sys=false
+      build_core=false; build_master=false; build_metric=false; build_web=false; build_alert=false; build_sys=false; build_gpu_bundle=false; build_cpu_bundle=false; build_server_pkg=false; build_client_pkg=false
       IFS=',' read -ra parts <<< "$sel"
       for p in "${parts[@]}"; do
         case "$p" in

@@ -73,11 +89,31 @@ while [[ $# -gt 0 ]]; do
           web) build_web=true ;;
           alert) build_alert=true ;;
           sys) build_sys=true ;;
+          gpu_bundle) build_gpu_bundle=true ;;
+          cpu_bundle) build_cpu_bundle=true ;;
+          server_pkg) build_server_pkg=true; build_core=true; build_master=true; build_metric=true; build_web=true; build_alert=true ;;
+          client_pkg) build_client_pkg=true ;;
           all) build_core=true; build_master=true; build_metric=true; build_web=true; build_alert=true; build_sys=true ;;
           *) echo "Unknown --only target: $p" >&2; exit 1 ;;
         esac
       done
       ;;
+    --version)
+      if [[ -z ${2:-} ]]; then echo "--version requires a value like 20251112" >&2; exit 1; fi
+      bundle_date="$2"; shift 2
+      ;;
+    --client-semver)
+      if [[ -z ${2:-} ]]; then echo "--client-semver requires a value like 1.43.0" >&2; exit 1; fi
+      client_semver="$2"; shift 2
+      ;;
+    --cuda)
+      if [[ -z ${2:-} ]]; then echo "--cuda requires a value like 12.2.2" >&2; exit 1; fi
+      cuda_ver="$2"; shift 2
+      ;;
+    --tag-latest)
+      tag_latest=true
+      shift
+      ;;
     -h|--help)
       show_help
       exit 0
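The `--only` handling in the hunk above splits a comma-separated list and enables one flag group per target. A standalone sketch of that pattern follows; `parse_only` is a hypothetical name, and unlike the script it prints the enabled flag names instead of setting globals, so it can be tried in isolation.

```bash
#!/usr/bin/env bash
# Sketch of comma-separated target parsing using the same IFS/read pattern.
# parse_only is a hypothetical name; it prints the enabled build_* flags.
parse_only() {
  local sel="$1" p
  local -a parts enabled=()
  IFS=',' read -ra parts <<< "$sel"
  for p in "${parts[@]}"; do
    case "$p" in
      core|master|metric|web|alert|sys|gpu_bundle|cpu_bundle|client_pkg)
        enabled+=("build_$p") ;;
      server_pkg)
        # server_pkg also enables the server-side image groups
        enabled+=(build_server_pkg build_core build_master build_metric build_web build_alert) ;;
      all)
        enabled+=(build_core build_master build_metric build_web build_alert build_sys) ;;
      *)
        echo "Unknown --only target: $p" >&2; return 1 ;;
    esac
  done
  # One flag per line, de-duplicated
  printf '%s\n' "${enabled[@]}" | sort -u
}
```

As in the diff, `all` covers only the six base groups and does not include bundles or packages.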

@@ -90,6 +126,11 @@ while [[ $# -gt 0 ]]; do
   esac
 done
 
+if [[ "$build_server_pkg" == true ]]; then
+  need_bind_image=false
+  need_metric_ftp=false
+fi
+
 root="$(cd "$(dirname "${BASH_SOURCE[0]}")/.." && pwd)"
 . "$root/scripts/common/build_user.sh"
 

@@ -101,6 +142,16 @@ fi
 
 cd "$root"
 
+# Set default image tag policy before building
+if [[ "$build_server_pkg" == true ]]; then
+  DEFAULT_IMAGE_TAG="${bundle_date:-latest}"
+fi
+
+# Select build user profile for pkg vs default
+if [[ "$build_server_pkg" == true || "$build_client_pkg" == true ]]; then
+  export ARGUS_BUILD_PROFILE=pkg
+fi
+
 load_build_user
 build_args+=("--build-arg" "ARGUS_BUILD_UID=${ARGUS_BUILD_UID}" "--build-arg" "ARGUS_BUILD_GID=${ARGUS_BUILD_GID}")
 

@@ -163,13 +214,31 @@ build_image() {
   echo " Tag: $tag"
   echo " Context: $context"
 
-  if docker build "${build_args[@]}" "${extra_args[@]}" -f "$dockerfile_path" -t "$tag" "$context"; then
-    echo "✅ $image_name image built successfully"
-    return 0
-  else
-    echo "❌ Failed to build $image_name image"
-    return 1
-  fi
+  local tries=${ARGUS_BUILD_RETRIES:-3}
+  local delay=${ARGUS_BUILD_RETRY_DELAY:-5}
+  local attempt=1
+  while (( attempt <= tries )); do
+    local prefix=""
+    if (( attempt == tries )); then
+      # final attempt: disable BuildKit to avoid docker/dockerfile front-end pulls
+      prefix="DOCKER_BUILDKIT=0"
+      echo " Attempt ${attempt}/${tries} (fallback: DOCKER_BUILDKIT=0)"
+    else
+      echo " Attempt ${attempt}/${tries}"
+    fi
+    if eval $prefix docker build "${build_args[@]}" "${extra_args[@]}" -f "$dockerfile_path" -t "$tag" "$context"; then
+      echo "✅ $image_name image built successfully"
+      return 0
+    fi
+    echo "⚠️ Build failed for $image_name (attempt ${attempt}/${tries})."
+    if (( attempt < tries )); then
+      echo " Retrying in ${delay}s..."
+      sleep "$delay"
+    fi
+    attempt=$((attempt+1))
+  done
+  echo "❌ Failed to build $image_name image after ${tries} attempts"
+  return 1
 }
 
 pull_base_image() {

@@ -203,27 +272,385 @@ pull_base_image() {
 images_built=()
 build_failed=false
 
+build_gpu_bundle_image() {
+  local date_tag="$1" # e.g. 20251112
+  local cuda_ver_local="$2" # e.g. 12.2.2
+  local client_ver="$3" # semver like 1.43.0
+
+  if [[ -z "$date_tag" ]]; then
+    echo "❌ gpu_bundle requires --version YYMMDD (e.g. 20251112)" >&2
+    return 1
+  fi
+
+  # sanitize cuda version (trim trailing dots like '12.2.')
+  while [[ "$cuda_ver_local" == *"." ]]; do cuda_ver_local="${cuda_ver_local%.}"; done
+
+  # Resolve effective CUDA base tag
+  local resolve_cuda_base_tag
+  resolve_cuda_base_tag() {
+    local want="$1" # can be 12, 12.2 or 12.2.2
+    local major minor patch
+    if [[ "$want" =~ ^([0-9]+)\.([0-9]+)\.([0-9]+)$ ]]; then
+      major="${BASH_REMATCH[1]}"; minor="${BASH_REMATCH[2]}"; patch="${BASH_REMATCH[3]}"
+      echo "nvidia/cuda:${major}.${minor}.${patch}-runtime-ubuntu22.04"; return 0
+    elif [[ "$want" =~ ^([0-9]+)\.([0-9]+)$ ]]; then
+      major="${BASH_REMATCH[1]}"; minor="${BASH_REMATCH[2]}"
+      # try to find best local patch for major.minor
+      local best
+      best=$(docker images --format '{{.Repository}}:{{.Tag}}' nvidia/cuda 2>/dev/null | \
+        grep -E "^nvidia/cuda:${major}\.${minor}\\.[0-9]+-runtime-ubuntu22\.04$" | \
+        sed -E 's#^nvidia/cuda:([0-9]+\.[0-9]+\.)([0-9]+)-runtime-ubuntu22\.04$#\1\2#g' | \
+        sort -V | tail -n1 || true)
+      if [[ -n "$best" ]]; then
+        echo "nvidia/cuda:${best}-runtime-ubuntu22.04"; return 0
+      fi
+      # fallback patch if none local
+      echo "nvidia/cuda:${major}.${minor}.2-runtime-ubuntu22.04"; return 0
+    elif [[ "$want" =~ ^([0-9]+)$ ]]; then
+      major="${BASH_REMATCH[1]}"
+      # try to find best local for this major
+      local best
+      best=$(docker images --format '{{.Repository}}:{{.Tag}}' nvidia/cuda 2>/dev/null | \
+        grep -E "^nvidia/cuda:${major}\\.[0-9]+\\.[0-9]+-runtime-ubuntu22\.04$" | \
+        sed -E 's#^nvidia/cuda:([0-9]+\.[0-9]+\.[0-9]+)-runtime-ubuntu22\.04$#\1#g' | \
+        sort -V | tail -n1 || true)
+      if [[ -n "$best" ]]; then
+        echo "nvidia/cuda:${best}-runtime-ubuntu22.04"; return 0
+      fi
+      echo "nvidia/cuda:${major}.2.2-runtime-ubuntu22.04"; return 0
+    else
+      # invalid format, fallback to default
+      echo "nvidia/cuda:12.2.2-runtime-ubuntu22.04"; return 0
+    fi
+  }
+
+  local base_image
+  base_image=$(resolve_cuda_base_tag "$cuda_ver_local")
+
+  echo
+  echo "🔧 Preparing one-click GPU bundle build"
+  echo " CUDA runtime base: ${base_image}"
+  echo " Bundle tag : ${date_tag}"
+
+  # 1) Ensure NVIDIA base image (skip pull if local)
+  if ! pull_base_image "$base_image"; then
+    # try once more with default if resolution failed
+    if ! pull_base_image "nvidia/cuda:12.2.2-runtime-ubuntu22.04"; then
+      return 1
+    else
+      base_image="nvidia/cuda:12.2.2-runtime-ubuntu22.04"
+    fi
+  fi
+
+  # 2) Build latest argus-agent from source
+  echo "\n🛠 Building argus-agent from src/agent"
+  pushd "$root/src/agent" >/dev/null
+  if ! bash scripts/build_binary.sh; then
+    echo "❌ argus-agent build failed" >&2
+    popd >/dev/null
+    return 1
+  fi
+  if [[ ! -f "dist/argus-agent" ]]; then
+    echo "❌ argus-agent binary missing after build" >&2
+    popd >/dev/null
+    return 1
+  fi
+  popd >/dev/null
+
+  # 3) Inject agent into all-in-one-full plugin and package artifact
+  local aio_root="$root/src/metric/client-plugins/all-in-one-full"
+  local agent_bin_src="$root/src/agent/dist/argus-agent"
+  local agent_bin_dst="$aio_root/plugins/argus-agent/bin/argus-agent"
+  echo "\n📦 Updating all-in-one-full agent binary → $agent_bin_dst"
+  cp -f "$agent_bin_src" "$agent_bin_dst"
+  chmod +x "$agent_bin_dst" || true
+
+  pushd "$aio_root" >/dev/null
+  local prev_version
+  prev_version="$(cat config/VERSION 2>/dev/null || echo "1.0.0")"
+  local use_version="$prev_version"
+  if [[ -n "$client_semver" ]]; then
+    echo "${client_semver}" > config/VERSION
+    use_version="$client_semver"
+  fi
+  echo " Packaging all-in-one-full artifact version: $use_version"
+  if ! bash scripts/package_artifact.sh --force; then
+    echo "❌ package_artifact.sh failed" >&2
+    # restore VERSION if changed
+    if [[ -n "$client_semver" ]]; then echo "$prev_version" > config/VERSION; fi
+    popd >/dev/null
+    return 1
+  fi
+
+  local artifact_dir="$aio_root/artifact/$use_version"
+  local artifact_tar
+  artifact_tar="$(ls -1 "$artifact_dir"/argus-metric_*.tar.gz 2>/dev/null | head -n1 || true)"
+  if [[ -z "$artifact_tar" ]]; then
+    echo " No argus-metric_*.tar.gz found; invoking publish_artifact.sh to assemble..."
+    local owner="$(id -u):$(id -g)"
+    if ! bash scripts/publish_artifact.sh "$use_version" --output-dir "$artifact_dir" --owner "$owner"; then
+      echo "❌ publish_artifact.sh failed" >&2
+      if [[ -n "$client_semver" ]]; then echo "$prev_version" > config/VERSION; fi
+      popd >/dev/null
+      return 1
+    fi
+    artifact_tar="$(ls -1 "$artifact_dir"/argus-metric_*.tar.gz 2>/dev/null | head -n1 || true)"
+  fi
+  if [[ -z "$artifact_tar" ]]; then
+    echo "❌ artifact tar not found under $artifact_dir" >&2
+    if [[ -n "$client_semver" ]]; then echo "$prev_version" > config/VERSION; fi
+    popd >/dev/null
+    return 1
+  fi
+  # restore VERSION if changed (keep filesystem clean)
+  if [[ -n "$client_semver" ]]; then echo "$prev_version" > config/VERSION; fi
+  popd >/dev/null
+
+  # 4) Stage docker build context
+  local bundle_ctx="$root/src/bundle/gpu-node-bundle/.build-$date_tag"
+  echo "\n🧰 Staging docker build context: $bundle_ctx"
+  rm -rf "$bundle_ctx"
+  mkdir -p "$bundle_ctx/bundle" "$bundle_ctx/private"
+  cp "$root/src/bundle/gpu-node-bundle/Dockerfile" "$bundle_ctx/"
+  cp "$root/src/bundle/gpu-node-bundle/node-bootstrap.sh" "$bundle_ctx/"
+  cp "$root/src/bundle/gpu-node-bundle/health-watcher.sh" "$bundle_ctx/"
+  # bundle tar
+  cp "$artifact_tar" "$bundle_ctx/bundle/"
+  # offline fluent-bit assets (optional but useful)
+  if [[ -d "$root/src/log/fluent-bit/build/etc" ]]; then
+    cp -r "$root/src/log/fluent-bit/build/etc" "$bundle_ctx/private/"
+  fi
+  if [[ -d "$root/src/log/fluent-bit/build/packages" ]]; then
+    cp -r "$root/src/log/fluent-bit/build/packages" "$bundle_ctx/private/"
+  fi
+  if [[ -f "$root/src/log/fluent-bit/build/start-fluent-bit.sh" ]]; then
+    cp "$root/src/log/fluent-bit/build/start-fluent-bit.sh" "$bundle_ctx/private/"
+  fi
+
+  # 5) Build the final bundle image (directly from NVIDIA base)
+  local image_tag="argus-sys-metric-test-node-bundle-gpu:${date_tag}"
+  echo "\n🔄 Building GPU Bundle image"
+  if build_image "GPU Bundle" "$bundle_ctx/Dockerfile" "$image_tag" "$bundle_ctx" \
+    --build-arg CUDA_VER="$(echo "$base_image" | sed -E 's#^nvidia/cuda:([0-9]+\.[0-9]+\.[0-9]+)-runtime-ubuntu22\.04$#\1#')" \
+    --build-arg CLIENT_VER="$use_version" \
+    --build-arg BUNDLE_DATE="$date_tag"; then
+    images_built+=("$image_tag")
+    # In non-pkg mode, also tag latest for convenience
+    if [[ "${ARGUS_PKG_BUILD:-0}" != "1" ]]; then
+      docker tag "$image_tag" argus-sys-metric-test-node-bundle-gpu:latest >/dev/null 2>&1 || true
+    fi
+    return 0
+  else
+    return 1
+  fi
+}
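The CUDA base-tag resolution nested in `build_gpu_bundle_image` can be tried without docker by feeding candidate image names on stdin. The sketch below is a standalone approximation, not the script's function: `resolve_cuda_tag` is a hypothetical name, and as in the diff the fallback tags (`<major>.<minor>.2`, `<major>.2.2`) are not checked for existence.

```bash
#!/usr/bin/env bash
# Standalone sketch of the CUDA base-tag resolution. Candidate image names
# ("repo:tag", one per line) are read from stdin instead of `docker images`.
# resolve_cuda_tag is a hypothetical name; fallbacks mirror the diff above.
resolve_cuda_tag() {
  local want="$1" suffix="-runtime-ubuntu22.04"
  local re_suffix='-runtime-ubuntu22\.04$'
  local pattern fallback best
  if [[ "$want" =~ ^([0-9]+)\.([0-9]+)\.([0-9]+)$ ]]; then
    # Full version: use it as given, no lookup needed.
    echo "nvidia/cuda:${want}${suffix}"; return 0
  elif [[ "$want" =~ ^([0-9]+)\.([0-9]+)$ ]]; then
    pattern="^nvidia/cuda:${BASH_REMATCH[1]}\\.${BASH_REMATCH[2]}\\.[0-9]+${re_suffix}"
    fallback="${want}.2"
  elif [[ "$want" =~ ^([0-9]+)$ ]]; then
    pattern="^nvidia/cuda:${want}\\.[0-9]+\\.[0-9]+${re_suffix}"
    fallback="${want}.2.2"
  else
    # Invalid format: fall back to the default version.
    echo "nvidia/cuda:12.2.2${suffix}"; return 0
  fi
  # Highest matching version among the candidates, if any.
  best="$(grep -E "$pattern" | sort -V | tail -n1)"
  echo "${best:-nvidia/cuda:${fallback}${suffix}}"
}
```

In real use the candidates would come from something like `docker images --format '{{.Repository}}:{{.Tag}}' nvidia/cuda`, as the diff does. Keeping the lookup on stdin makes the version logic testable on its own.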

+
+# Tag helper: ensure :<date_tag> exists for a list of repos
+ensure_version_tags() {
+  local date_tag="$1"; shift
+  local repos=("$@")
+  for repo in "${repos[@]}"; do
+    if docker image inspect "$repo:$date_tag" >/dev/null 2>&1; then
+      :
+    elif docker image inspect "$repo:latest" >/dev/null 2>&1; then
+      docker tag "$repo:latest" "$repo:$date_tag" || true
+    else
+      echo "❌ missing image for tagging: $repo (need :latest or :$date_tag)" >&2
+      return 1
+    fi
+  done
+  return 0
+}

+
+# Build server package after images are built
+build_server_pkg_bundle() {
+  local date_tag="$1"
+  if [[ -z "$date_tag" ]]; then
+    echo "❌ server_pkg requires --version YYMMDD" >&2
+    return 1
+  fi
+  local repos=(
+    argus-master argus-elasticsearch argus-kibana \
+    argus-metric-prometheus argus-metric-grafana \
+    argus-alertmanager argus-web-frontend argus-web-proxy
+  )
+  echo "\n🔖 Verifying server images with :$date_tag and collecting digests (Bind/FTP excluded; relying on Docker DNS aliases)"
+  for repo in "${repos[@]}"; do
+    if ! docker image inspect "$repo:$date_tag" >/dev/null 2>&1; then
+      echo "❌ required image missing: $repo:$date_tag (build phase should have produced it)" >&2
+      return 1
+    fi
+  done
+  # Optional: show digests
+  for repo in "${repos[@]}"; do
+    local digest
+    digest=$(docker images --digests --format '{{.Repository}}:{{.Tag}} {{.Digest}}' | awk -v r="$repo:$date_tag" '$1==r{print $2}' | head -n1)
+    printf ' • %s@%s\n' "$repo:$date_tag" "${digest:-<none>}"
+  done
+  echo "\n📦 Building server package via deployment_new/build/make_server_package.sh --version $date_tag"
+  if ! "$root/deployment_new/build/make_server_package.sh" --version "$date_tag"; then
+    echo "❌ make_server_package.sh failed" >&2
+    return 1
+  fi
+  return 0
+}

+
+# Build client package: ensure gpu bundle image exists, then package client_gpu
+build_client_pkg_bundle() {
+  local date_tag="$1"
+  local semver="$2"
+  local cuda="$3"
+  if [[ -z "$date_tag" ]]; then
+    echo "❌ client_pkg requires --version YYMMDD" >&2
+    return 1
+  fi
+  local bundle_tag="argus-sys-metric-test-node-bundle-gpu:${date_tag}"
+  if ! docker image inspect "$bundle_tag" >/dev/null 2>&1; then
+    echo "\n🧩 GPU bundle image $bundle_tag missing; building it first..."
+    ARGUS_PKG_BUILD=1
+    export ARGUS_PKG_BUILD
+    if ! build_gpu_bundle_image "$date_tag" "$cuda" "$semver"; then
+      return 1
+    fi
+  else
+    echo "\n✅ Using existing GPU bundle image: $bundle_tag"
+  fi
+  echo "\n📦 Building client GPU package via deployment_new/build/make_client_gpu_package.sh --version $date_tag --image $bundle_tag"
+  if ! "$root/deployment_new/build/make_client_gpu_package.sh" --version "$date_tag" --image "$bundle_tag"; then
+    echo "❌ make_client_gpu_package.sh failed" >&2
+    return 1
+  fi
+  return 0
+}

|

|

||||||

|

# Build CPU bundle image directly FROM ubuntu:22.04 (no intermediate base)

|

||||||

|

build_cpu_bundle_image() {

|

||||||

|

local date_tag="$1" # e.g. 20251113

|

||||||

|

local client_ver_in="$2" # semver like 1.43.0 (optional)

|

||||||

|

local want_tag_latest="$3" # true/false

|

||||||

|

|

||||||

|

if [[ -z "$date_tag" ]]; then

|

||||||

|

echo "❌ cpu_bundle requires --version YYMMDD" >&2

|

||||||

|

return 1

|

||||||

|

fi

|

||||||

|

|

||||||

|

echo "\n🔧 Preparing one-click CPU bundle build"

|

||||||

|

echo " Base: ubuntu:22.04"

|

||||||

|

echo " Bundle tag: ${date_tag}"

|

||||||

|

|

||||||

|

# 1) Build latest argus-agent from source

|

||||||

|

echo "\n🛠 Building argus-agent from src/agent"

|

||||||

|

pushd "$root/src/agent" >/dev/null

|

||||||

|

if ! bash scripts/build_binary.sh; then

|

||||||

|

echo "❌ argus-agent build failed" >&2

|

||||||

|

popd >/dev/null

|

||||||

|

return 1

|

||||||

|

fi

|

||||||

|

if [[ ! -f "dist/argus-agent" ]]; then

|

||||||

|

echo "❌ argus-agent binary missing after build" >&2

|

||||||

|

popd >/dev/null

|

||||||

|

return 1

|

||||||

|

fi

|

||||||

|

popd >/dev/null

|

||||||

|

|

||||||

|

# 2) Inject agent into all-in-one-full plugin and package artifact

|

||||||

|

local aio_root="$root/src/metric/client-plugins/all-in-one-full"

|

||||||

|

local agent_bin_src="$root/src/agent/dist/argus-agent"

|

||||||

|

local agent_bin_dst="$aio_root/plugins/argus-agent/bin/argus-agent"

|

||||||

|

echo "\n📦 Updating all-in-one-full agent binary → $agent_bin_dst"

|

||||||

|

cp -f "$agent_bin_src" "$agent_bin_dst"

|

||||||

|

chmod +x "$agent_bin_dst" || true

|

||||||

|

|

||||||

|

pushd "$aio_root" >/dev/null

|

||||||

|

local prev_version use_version

|

||||||

|

prev_version="$(cat config/VERSION 2>/dev/null || echo "1.0.0")"

|

||||||

|

use_version="$prev_version"

|

||||||

|

if [[ -n "$client_ver_in" ]]; then

|

||||||

|

echo "$client_ver_in" > config/VERSION

|

||||||

|

use_version="$client_ver_in"

|

||||||

|

fi

|

||||||

|

echo " Packaging all-in-one-full artifact: version=$use_version"

|

||||||

|

if ! bash scripts/package_artifact.sh --force; then

|

||||||

|

echo "❌ package_artifact.sh failed" >&2

|

||||||

|

[[ -n "$client_ver_in" ]] && echo "$prev_version" > config/VERSION

|

||||||

|

popd >/dev/null

|

||||||

|

return 1

|

||||||

|

fi

|

||||||

|

local artifact_dir="$aio_root/artifact/$use_version"

|

||||||

|

local artifact_tar

|

||||||

|

artifact_tar="$(ls -1 "$artifact_dir"/argus-metric_*.tar.gz 2>/dev/null | head -n1 || true)"

|

||||||

|

if [[ -z "$artifact_tar" ]]; then

|

||||||

|

echo " No argus-metric_*.tar.gz found; invoking publish_artifact.sh ..."

|

||||||

|

local owner="$(id -u):$(id -g)"

|

||||||

|

if ! bash scripts/publish_artifact.sh "$use_version" --output-dir "$artifact_dir" --owner "$owner"; then

|

||||||

|

echo "❌ publish_artifact.sh failed" >&2

|

||||||

|

[[ -n "$client_ver_in" ]] && echo "$prev_version" > config/VERSION

|

||||||

|

popd >/dev/null

|

||||||

|

return 1

|

||||||

|

fi

|

||||||

|

artifact_tar="$(ls -1 "$artifact_dir"/argus-metric_*.tar.gz 2>/dev/null | head -n1 || true)"

|

||||||

|

fi

|

||||||

|

[[ -n "$client_ver_in" ]] && echo "$prev_version" > config/VERSION

|

||||||

|

popd >/dev/null

|

||||||

|

|

||||||

|

# 3) Stage docker build context

|

||||||

|

local bundle_ctx="$root/src/bundle/cpu-node-bundle/.build-$date_tag"

|

||||||

|

printf '\n🧰 Staging docker build context: %s\n' "$bundle_ctx"

|

||||||

|

rm -rf "$bundle_ctx"

|

||||||

|

mkdir -p "$bundle_ctx/bundle" "$bundle_ctx/private"

|

||||||

|

cp "$root/src/bundle/cpu-node-bundle/Dockerfile" "$bundle_ctx/"

|

||||||

|

cp "$root/src/bundle/cpu-node-bundle/node-bootstrap.sh" "$bundle_ctx/"

|

||||||

|

cp "$root/src/bundle/cpu-node-bundle/health-watcher.sh" "$bundle_ctx/"

|

||||||

|

# bundle tar

|

||||||

|

cp "$artifact_tar" "$bundle_ctx/bundle/"

|

||||||

|

# offline fluent-bit assets

|

||||||

|

if [[ -d "$root/src/log/fluent-bit/build/etc" ]]; then

|

||||||

|

cp -r "$root/src/log/fluent-bit/build/etc" "$bundle_ctx/private/"

|

||||||

|

fi

|

||||||

|

if [[ -d "$root/src/log/fluent-bit/build/packages" ]]; then

|

||||||

|

cp -r "$root/src/log/fluent-bit/build/packages" "$bundle_ctx/private/"

|

||||||

|

fi

|

||||||

|

if [[ -f "$root/src/log/fluent-bit/build/start-fluent-bit.sh" ]]; then

|

||||||

|

cp "$root/src/log/fluent-bit/build/start-fluent-bit.sh" "$bundle_ctx/private/"

|

||||||

|

fi

|

||||||

|

|

||||||

|

# 4) Build final bundle image

|

||||||

|

local image_tag="argus-sys-metric-test-node-bundle:${date_tag}"

|

||||||

|

printf '\n🔄 Building CPU Bundle image\n'

|

||||||

|

if build_image "CPU Bundle" "$bundle_ctx/Dockerfile" "$image_tag" "$bundle_ctx"; then

|

||||||

|

images_built+=("$image_tag")

|

||||||

|

if [[ "$want_tag_latest" == "true" ]]; then

|

||||||

|

docker tag "$image_tag" argus-sys-metric-test-node-bundle:latest >/dev/null 2>&1 || true

|

||||||

|

fi

|

||||||

|

return 0

|

||||||

|

else

|

||||||

|

return 1

|

||||||

|

fi

|

||||||

|

}

|
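The function above overrides `config/VERSION` for the duration of packaging and restores the previous value on each exit path separately. A minimal sketch of the same idea with a single restore point, assuming a hypothetical wrapper named `with_version` (it is not part of this commit):

```shell
#!/usr/bin/env bash
# Hypothetical helper, not from the commit: run a command with a temporary
# version file content and always put the previous content back afterwards.
with_version() {
  local file="$1" version="$2"; shift 2
  local prev rc=0
  # Same fallback as the script: assume 1.0.0 when the file cannot be read.
  prev="$(cat "$file" 2>/dev/null || echo "1.0.0")"
  echo "$version" > "$file"
  "$@" || rc=$?
  echo "$prev" > "$file"     # single restore point for success and failure
  return "$rc"
}
```

With such a wrapper, a call like `with_version config/VERSION "$client_ver_in" bash scripts/package_artifact.sh --force` would not need a restore line in every failure branch. Whether that trade-off suits the real script is a judgment call for its authors.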

||||||

|

|

||||||

if [[ "$build_core" == true ]]; then

|

if [[ "$build_core" == true ]]; then

|

||||||

if build_image "Elasticsearch" "src/log/elasticsearch/build/Dockerfile" "argus-elasticsearch:latest"; then

|

if build_image "Elasticsearch" "src/log/elasticsearch/build/Dockerfile" "argus-elasticsearch:${DEFAULT_IMAGE_TAG}"; then

|

||||||

images_built+=("argus-elasticsearch:latest")

|

images_built+=("argus-elasticsearch:${DEFAULT_IMAGE_TAG}")

|

||||||

else

|

else

|

||||||

build_failed=true

|

build_failed=true

|

||||||

fi

|

fi

|

||||||

|

|

||||||

echo ""

|

echo ""

|

||||||

|

|

||||||

if build_image "Kibana" "src/log/kibana/build/Dockerfile" "argus-kibana:latest"; then

|

if build_image "Kibana" "src/log/kibana/build/Dockerfile" "argus-kibana:${DEFAULT_IMAGE_TAG}"; then

|

||||||

images_built+=("argus-kibana:latest")

|

images_built+=("argus-kibana:${DEFAULT_IMAGE_TAG}")

|

||||||

else

|

else

|

||||||

build_failed=true

|

build_failed=true

|

||||||

fi

|

fi

|

||||||

|

|

||||||

echo ""

|

echo ""

|

||||||

|

|

||||||

if build_image "BIND9" "src/bind/build/Dockerfile" "argus-bind9:latest"; then

|

if [[ "$need_bind_image" == true ]]; then

|

||||||

images_built+=("argus-bind9:latest")

|

if build_image "BIND9" "src/bind/build/Dockerfile" "argus-bind9:${DEFAULT_IMAGE_TAG}"; then

|

||||||

else

|

images_built+=("argus-bind9:${DEFAULT_IMAGE_TAG}")

|

||||||

build_failed=true

|

else

|

||||||

|

build_failed=true

|

||||||

|

fi

|

||||||

fi

|

fi

|

||||||

fi

|

fi

|

||||||

|

|

||||||

@ -233,7 +660,7 @@ if [[ "$build_master" == true ]]; then

|

|||||||

echo ""

|

echo ""

|

||||||

echo "🔄 Building Master image..."

|

echo "🔄 Building Master image..."

|

||||||

pushd "$master_root" >/dev/null

|

pushd "$master_root" >/dev/null

|

||||||

master_args=("--tag" "argus-master:latest")

|

master_args=("--tag" "argus-master:${DEFAULT_IMAGE_TAG}")

|

||||||

if [[ "$use_intranet" == true ]]; then

|

if [[ "$use_intranet" == true ]]; then

|

||||||

master_args+=("--intranet")

|

master_args+=("--intranet")

|

||||||

fi

|

fi

|

||||||

@ -247,7 +674,7 @@ if [[ "$build_master" == true ]]; then

|

|||||||

if [[ "$build_master_offline" == true ]]; then

|

if [[ "$build_master_offline" == true ]]; then

|

||||||

images_built+=("argus-master:offline")

|

images_built+=("argus-master:offline")

|

||||||

else

|

else

|

||||||

images_built+=("argus-master:latest")

|

images_built+=("argus-master:${DEFAULT_IMAGE_TAG}")

|

||||||

fi

|

fi

|

||||||

else

|

else

|

||||||

build_failed=true

|

build_failed=true

|

||||||

@ -260,21 +687,27 @@ if [[ "$build_metric" == true ]]; then

|

|||||||

echo "Building Metric module images..."

|

echo "Building Metric module images..."

|

||||||

|

|

||||||

metric_base_images=(

|

metric_base_images=(

|

||||||

"ubuntu:22.04"

|

|

||||||

"ubuntu/prometheus:3-24.04_stable"

|

"ubuntu/prometheus:3-24.04_stable"

|

||||||

"grafana/grafana:11.1.0"

|

"grafana/grafana:11.1.0"

|

||||||

)

|

)

|

||||||

|

|

||||||

|

if [[ "$need_metric_ftp" == true ]]; then

|

||||||

|

metric_base_images+=("ubuntu:22.04")

|

||||||

|

fi

|

||||||

|

|

||||||

for base_image in "${metric_base_images[@]}"; do

|

for base_image in "${metric_base_images[@]}"; do

|

||||||

if ! pull_base_image "$base_image"; then

|

if ! pull_base_image "$base_image"; then

|

||||||

build_failed=true

|

build_failed=true

|

||||||

fi

|

fi

|

||||||

done

|

done

|

||||||

|

|

||||||

metric_builds=(

|

metric_builds=()

|

||||||

"Metric FTP|src/metric/ftp/build/Dockerfile|argus-metric-ftp:latest|src/metric/ftp/build"

|

if [[ "$need_metric_ftp" == true ]]; then

|

||||||

"Metric Prometheus|src/metric/prometheus/build/Dockerfile|argus-metric-prometheus:latest|src/metric/prometheus/build"

|

metric_builds+=("Metric FTP|src/metric/ftp/build/Dockerfile|argus-metric-ftp:${DEFAULT_IMAGE_TAG}|src/metric/ftp/build")

|

||||||

"Metric Grafana|src/metric/grafana/build/Dockerfile|argus-metric-grafana:latest|src/metric/grafana/build"

|

fi

|

||||||

|

metric_builds+=(

|

||||||

|

"Metric Prometheus|src/metric/prometheus/build/Dockerfile|argus-metric-prometheus:${DEFAULT_IMAGE_TAG}|src/metric/prometheus/build"

|

||||||

|

"Metric Grafana|src/metric/grafana/build/Dockerfile|argus-metric-grafana:${DEFAULT_IMAGE_TAG}|src/metric/grafana/build"

|

||||||

)

|

)

|

||||||

|

|

||||||

for build_spec in "${metric_builds[@]}"; do

|

for build_spec in "${metric_builds[@]}"; do

|

||||||

@ -346,8 +779,8 @@ if [[ "$build_web" == true || "$build_alert" == true ]]; then

|

|||||||

|

|

||||||

if [[ "$build_web" == true ]]; then

|

if [[ "$build_web" == true ]]; then

|

||||||

web_builds=(

|

web_builds=(

|

||||||

"Web Frontend|src/web/build_tools/frontend/Dockerfile|argus-web-frontend:latest|."

|

"Web Frontend|src/web/build_tools/frontend/Dockerfile|argus-web-frontend:${DEFAULT_IMAGE_TAG}|."

|

||||||

"Web Proxy|src/web/build_tools/proxy/Dockerfile|argus-web-proxy:latest|."

|

"Web Proxy|src/web/build_tools/proxy/Dockerfile|argus-web-proxy:${DEFAULT_IMAGE_TAG}|."

|

||||||

)

|

)

|

||||||

for build_spec in "${web_builds[@]}"; do

|

for build_spec in "${web_builds[@]}"; do

|

||||||

IFS='|' read -r image_label dockerfile_path image_tag build_context <<< "$build_spec"

|

IFS='|' read -r image_label dockerfile_path image_tag build_context <<< "$build_spec"

|

||||||

@ -362,7 +795,7 @@ if [[ "$build_web" == true || "$build_alert" == true ]]; then

|

|||||||

|

|

||||||

if [[ "$build_alert" == true ]]; then

|

if [[ "$build_alert" == true ]]; then

|

||||||

alert_builds=(

|

alert_builds=(

|

||||||

"Alertmanager|src/alert/alertmanager/build/Dockerfile|argus-alertmanager:latest|."

|

"Alertmanager|src/alert/alertmanager/build/Dockerfile|argus-alertmanager:${DEFAULT_IMAGE_TAG}|."

|

||||||

)

|

)

|

||||||

for build_spec in "${alert_builds[@]}"; do

|

for build_spec in "${alert_builds[@]}"; do

|

||||||

IFS='|' read -r image_label dockerfile_path image_tag build_context <<< "$build_spec"

|

IFS='|' read -r image_label dockerfile_path image_tag build_context <<< "$build_spec"

|

||||||

@ -376,6 +809,49 @@ if [[ "$build_web" == true || "$build_alert" == true ]]; then

|

|||||||

fi

|

fi

|

||||||

fi

|

fi

|

||||||

|

|

||||||

|

# =======================================

|

||||||

|

# One-click GPU bundle (direct NVIDIA base)

|

||||||

|

# =======================================

|

||||||

|

|

||||||

|

if [[ "$build_gpu_bundle" == true ]]; then

|

||||||

|

echo ""

|

||||||

|

echo "Building one-click GPU bundle image..."

|

||||||

|

if ! build_gpu_bundle_image "$bundle_date" "$cuda_ver" "$client_semver"; then

|

||||||

|

build_failed=true

|

||||||

|

fi

|

||||||

|

fi

|

||||||

|

|

||||||

|

# =======================================

|

||||||

|

# One-click CPU bundle (from ubuntu:22.04)

|

||||||

|

# =======================================

|

||||||

|

if [[ "$build_cpu_bundle" == true ]]; then

|

||||||

|

echo ""

|

||||||

|

echo "Building one-click CPU bundle image..."

|

||||||

|

if ! build_cpu_bundle_image "${bundle_date}" "${client_semver}" "${tag_latest}"; then

|

||||||

|

build_failed=true

|

||||||

|

fi

|

||||||

|

fi

|

||||||

|

|

||||||

|

# =======================================

|

||||||

|

# One-click Server/Client packaging

|

||||||

|

# =======================================

|

||||||

|

|

||||||

|

if [[ "$build_server_pkg" == true ]]; then

|

||||||

|

echo ""

|

||||||

|

echo "🧳 Building one-click Server package..."

|

||||||

|

if ! build_server_pkg_bundle "${bundle_date}"; then

|

||||||

|

build_failed=true

|

||||||

|

fi

|

||||||

|

fi

|

||||||

|

|

||||||

|

if [[ "$build_client_pkg" == true ]]; then

|

||||||

|

echo ""

|

||||||

|

echo "🧳 Building one-click Client-GPU package..."

|

||||||

|

if ! build_client_pkg_bundle "${bundle_date}" "${client_semver}" "${cuda_ver}"; then

|

||||||

|

build_failed=true

|

||||||

|

fi

|

||||||

|

fi

|

||||||

|

|

||||||

echo "======================================="

|

echo "======================================="

|

||||||

echo "📦 Build Summary"

|

echo "📦 Build Summary"

|

||||||

echo "======================================="

|

echo "======================================="

|

||||||

|

|||||||

6

configs/build_user.pkg.conf

Normal file

@ -0,0 +1,6 @@

|

|||||||

|

# Default build-time UID/GID for Argus images

|

||||||

|

# Override by creating configs/build_user.local.conf with the same format.

|

||||||

|

# Syntax: KEY=VALUE, supports UID/GID only. Whitespace and lines starting with # are ignored.

|

||||||

|

|

||||||

|

UID=2133

|

||||||

|

GID=2015

|

||||||
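The config file above carries only `UID` and `GID` in `KEY=VALUE` form. As a rough illustration of how such a file could be consumed, here is a sketch that assumes a hypothetical helper `load_build_user` (not from this commit), treats the first existing file in its argument list as authoritative, and lets `ARGUS_BUILD_UID`/`ARGUS_BUILD_GID` override the file values, as build/README.md describes:

```shell
#!/usr/bin/env bash
# Hypothetical helper: not part of the commit, shown only to illustrate the format.
# Reads UID/GID from the first existing file in "$@"; environment overrides win.
load_build_user() {
  local f line key val uid="" gid=""
  for f in "$@"; do
    [[ -f "$f" ]] || continue
    while IFS= read -r line || [[ -n "$line" ]]; do
      line="${line//[[:space:]]/}"             # whitespace is ignored
      [[ -z "$line" || "$line" == \#* ]] && continue
      key="${line%%=*}"; val="${line#*=}"
      case "$key" in
        UID) uid="$val" ;;
        GID) gid="$val" ;;
      esac
    done < "$f"
    break                                      # first existing file wins (assumption)
  done
  echo "${ARGUS_BUILD_UID:-$uid}:${ARGUS_BUILD_GID:-$gid}"
}
```

For a pkg build the call might look like `load_build_user configs/build_user.pkg.conf configs/build_user.local.conf configs/build_user.conf`, printing `UID:GID` for use as Docker build arguments.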

1

deployment_new/.gitignore

vendored

Normal file

@ -0,0 +1 @@

|

|||||||

|

artifact/

|

||||||

14

deployment_new/README.md

Normal file

@ -0,0 +1,14 @@

|

|||||||

|

# deployment_new

|

||||||

|

|

||||||

|

This directory holds the new deployment packaging and delivery implementation (it does not affect the existing `deployment/`).

|

||||||

|

|

||||||

|

Milestone M1 (current implementation)

|

||||||

|

- `build/make_server_package.sh`: generates the Server package (per-service image tar.gz files, compose, .env.example, docs, private skeleton, manifest/checksums, and the final tar.gz).

|

||||||

|

- `build/make_client_gpu_package.sh`: generates the Client‑GPU package (GPU bundle image tar.gz, busybox.tar, compose, .env.example, docs, private skeleton, manifest/checksums, and the final tar.gz).

|

||||||

|

|

||||||

|

Templates

|

||||||

|

- `templates/server/compose/docker-compose.yml`: deployment-only; images default to the `:${PKG_VERSION}` version tag and can be overridden through `.env`.

|

||||||

|

- `templates/client_gpu/compose/docker-compose.yml`: for GPU nodes only; uses the `:${PKG_VERSION}` version tag.

|

||||||

|

|

||||||

|

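Note: M1 only produces the installation packages and does not ship install scripts; the install/operations scripts will land in M2 and be included in the packages.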

|

||||||

|

|

||||||
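The README above names two packaging scripts whose usage text promises a versioned directory plus a tarball. A small sketch of where those outputs would land for a given version, assuming a hypothetical helper `package_outputs` (not in the commit) and assuming the server tarball sits next to its version directory the same way the client one does:

```shell
#!/usr/bin/env bash
# Hypothetical helper for illustration; the name package_outputs is not in the commit.
# Prints the unpacked directory and the tarball path for a package kind and version.
package_outputs() {
  local kind="$1" version="${2:-$(date +%Y%m%d)}"   # version defaults to today
  local art_root="deployment_new/artifact/$kind"
  echo "$art_root/$version/"
  echo "$art_root/${kind}_${version}.tar.gz"
}

package_outputs server 20251125
package_outputs client_gpu 20251125
```

The default of today's date mirrors `today_version` in `deployment_new/build/common.sh`; the version `20251125` is just an example value.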

33

deployment_new/build/common.sh

Normal file

@ -0,0 +1,33 @@

|

|||||||

|

#!/usr/bin/env bash

|

||||||

|

set -euo pipefail

|

||||||

|

|

||||||

|

log() { echo -e "\033[0;34m[INFO]\033[0m $*"; }

|

||||||

|

warn() { echo -e "\033[1;33m[WARN]\033[0m $*"; }

|

||||||

|

err() { echo -e "\033[0;31m[ERR ]\033[0m $*" >&2; }

|

||||||

|

|

||||||

|

require_cmd() {

|

||||||

|

local miss=0

|

||||||

|

for c in "$@"; do

|

||||||

|

if ! command -v "$c" >/dev/null 2>&1; then err "missing command: $c"; miss=1; fi

|

||||||

|

done

|

||||||

|

[[ $miss -eq 0 ]]

|

||||||

|

}

|

||||||

|

|

||||||

|

today_version() { date +%Y%m%d; }

|

||||||

|

|

||||||

|

checksum_dir() {

|

||||||

|

local dir="$1"; local out="$2"; : > "$out";

|

||||||

|

(cd "$dir" && find . -type f -print0 | sort -z | xargs -0 sha256sum) >> "$out"

|

||||||

|

}

|

||||||

|

|

||||||

|

make_dir() { mkdir -p "$1"; }

|

||||||

|

|

||||||

|

copy_tree() {

|

||||||

|

local src="$1" dst="$2"; rsync -a --delete "$src/" "$dst/" 2>/dev/null || cp -r "$src/." "$dst/";

|

||||||

|

}

|

||||||

|

|

||||||

|

gen_manifest() {

|

||||||

|

local root="$1"; local out="$2"; : > "$out";

|

||||||

|

(cd "$root" && find . -maxdepth 4 -type f -printf "%p\n" | sort) >> "$out"

|

||||||

|

}

|

||||||

|

|

||||||
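`checksum_dir` truncates its output file and then walks the directory. When the output path lies inside that directory, as in a call like `checksum_dir "$STAGE" "$STAGE/checksums.txt"`, the listing may include an entry for the checksum file itself, which would not verify later. A minimal sketch of a variant that skips the output file, assuming a hypothetical name `write_checksums` and GNU `find`/`sha256sum`:

```shell
#!/usr/bin/env bash
# Sketch only: write_checksums is a hypothetical name, not a function from the commit.
# Writes sha256 sums for every file under $1 into $2, skipping any file that
# shares the output file's basename so the list never hashes its own output.
write_checksums() {
  local dir="$1" out="$2" out_name
  out_name="$(basename "$out")"
  : > "$out"
  (cd "$dir" && find . -type f ! -name "$out_name" -print0 | sort -z | xargs -0 sha256sum) >> "$out"
}
```

The result can then be checked from inside the package directory with `sha256sum -c checksums.txt`. Note that the exclusion is by basename, so a same-named file in a subdirectory would also be skipped.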

131

deployment_new/build/make_client_gpu_package.sh

Executable file

@ -0,0 +1,131 @@

|

|||||||

|

#!/usr/bin/env bash

|

||||||

|

set -euo pipefail

|

||||||

|

|

||||||

|

# Make client GPU package (versioned gpu bundle image, compose, env, docs, busybox)

|

||||||

|

|

||||||

|

ROOT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")/../.." && pwd)"

|

||||||

|

TEMPL_DIR="$ROOT_DIR/deployment_new/templates/client_gpu"

|

||||||

|

ART_ROOT="$ROOT_DIR/deployment_new/artifact/client_gpu"

|

||||||

|

|

||||||

|

# Use deployment_new local common helpers

|

||||||

|

COMMON_SH="$ROOT_DIR/deployment_new/build/common.sh"

|

||||||

|

. "$COMMON_SH"

|

||||||

|

|

||||||

|

usage(){ cat <<EOF

|

||||||

|

Build Client-GPU Package (deployment_new)

|

||||||

|

|

||||||

|

Usage: $(basename "$0") --version YYYYMMDD [--image IMAGE[:TAG]]

|

||||||

|

|

||||||

|

Defaults:

|

||||||

|

image = argus-sys-metric-test-node-bundle-gpu:latest

|

||||||

|

|

||||||

|

Outputs: deployment_new/artifact/client_gpu/<YYYYMMDD>/ and client_gpu_YYYYMMDD.tar.gz

|

||||||

|

EOF

|

||||||

|

}

|

||||||

|

|

||||||

|

VERSION=""

|

||||||

|

IMAGE="argus-sys-metric-test-node-bundle-gpu:latest"

|

||||||

|

while [[ $# -gt 0 ]]; do

|

||||||

|

case "$1" in

|

||||||

|

--version) VERSION="$2"; shift 2;;

|

||||||

|

--image) IMAGE="$2"; shift 2;;

|

||||||

|

-h|--help) usage; exit 0;;

|

||||||

|

*) err "unknown arg: $1"; usage; exit 1;;

|

||||||

|

esac

|

||||||

|

done

|

||||||

|

if [[ -z "$VERSION" ]]; then VERSION="$(today_version)"; fi

|

||||||

|

|

||||||

|

require_cmd docker tar gzip

|

||||||

|

|

||||||

|

STAGE="$(mktemp -d)"; trap 'rm -rf "$STAGE"' EXIT

|

||||||

|

PKG_DIR="$ART_ROOT/$VERSION"

|

||||||

|

mkdir -p "$PKG_DIR" "$STAGE/images" "$STAGE/compose" "$STAGE/docs" "$STAGE/scripts" "$STAGE/private/argus"

|

||||||

|

|

||||||

|

# 1) Save GPU bundle image with version tag

|

||||||

|

if ! docker image inspect "$IMAGE" >/dev/null 2>&1; then

|

||||||

|

err "missing image: $IMAGE"; exit 1; fi

|

||||||

|

|

||||||

|

REPO="${IMAGE%%:*}"; TAG_VER="$REPO:$VERSION"

|

||||||

|

docker tag "$IMAGE" "$TAG_VER"

|

||||||

|

out_tar="$STAGE/images/${REPO//\//-}-$VERSION.tar"

|

||||||

|

docker save -o "$out_tar" "$TAG_VER"

|

||||||

|

gzip -f "$out_tar"

|

||||||

|

|

||||||

|

# 2) Busybox tar for connectivity/overlay warmup (prefer local template; fallback to docker save)

|

||||||

|

BB_SRC="$TEMPL_DIR/images/busybox.tar"

|

||||||

|

if [[ -f "$BB_SRC" ]]; then

|

||||||

|

cp "$BB_SRC" "$STAGE/images/busybox.tar"

|

||||||

|

else

|

||||||

|

if docker image inspect busybox:latest >/dev/null 2>&1 || docker pull busybox:latest >/dev/null 2>&1; then

|

||||||

|

docker save -o "$STAGE/images/busybox.tar" busybox:latest

|

||||||

|

log "Included busybox from local docker daemon"

|

||||||

|

else

|

||||||

|

warn "busybox image not found and cannot pull; skipping busybox.tar"

|

||||||

|

fi

|

||||||

|

fi

|

||||||

|

|

||||||

|

# 3) Compose + env template and docs/scripts from templates

|

||||||

|

cp "$TEMPL_DIR/compose/docker-compose.yml" "$STAGE/compose/docker-compose.yml"

|

||||||

|

ENV_EX="$STAGE/compose/.env.example"

|

||||||

|

cat >"$ENV_EX" <<EOF

|

||||||

|

# Generated by make_client_gpu_package.sh

|

||||||

|

PKG_VERSION=$VERSION

|

||||||

|

|

||||||

|

NODE_GPU_BUNDLE_IMAGE_TAG=${REPO}:${VERSION}

|

||||||

|

|

||||||

|

# Compose project name (isolation from server stack)

|

||||||

|

COMPOSE_PROJECT_NAME=argus-client

|

||||||

|

|

||||||

|

# Required (no defaults). Must be filled before install.

|

||||||

|

AGENT_ENV=

|

||||||

|

AGENT_USER=

|

||||||

|

AGENT_INSTANCE=

|

||||||

|

GPU_NODE_HOSTNAME=

|

||||||

|

|

||||||

|

# Overlay network (should match the server package overlay)

|

||||||

|

ARGUS_OVERLAY_NET=argus-sys-net

|

||||||

|

|

||||||

|

# From cluster-info.env (server package output)

|

||||||

|

SWARM_MANAGER_ADDR=

|

||||||

|

SWARM_JOIN_TOKEN_WORKER=

|

||||||

|

SWARM_JOIN_TOKEN_MANAGER=

|

||||||

|

EOF

|

||||||

|

|

||||||

|

# 4) Docs from deployment_new templates

|

||||||

|

CLIENT_DOC_SRC="$TEMPL_DIR/docs"

|

||||||

|

if [[ -d "$CLIENT_DOC_SRC" ]]; then

|

||||||

|

rsync -a "$CLIENT_DOC_SRC/" "$STAGE/docs/" >/dev/null 2>&1 || cp -r "$CLIENT_DOC_SRC/." "$STAGE/docs/"

|

||||||

|

fi

|

||||||

|

|

||||||

|

# Placeholder scripts (will be implemented in M2)

|

||||||

|

cat >"$STAGE/scripts/README.md" <<'EOF'

|

||||||

|

# Client-GPU Scripts (Placeholder)

|

||||||

|

|

||||||

|

This directory will gain the following in M2:

|

||||||

|

- config.sh / install.sh

|

||||||

|

|

||||||

|

For now this is a placeholder so the package layout can be reviewed.

|

||||||

|

EOF

|

||||||

|

|

||||||

|

# 5) Scripts (from deployment_new templates) and Private skeleton

|

||||||

|

SCRIPTS_SRC="$TEMPL_DIR/scripts"

|

||||||

|

if [[ -d "$SCRIPTS_SRC" ]]; then

|

||||||

|

rsync -a "$SCRIPTS_SRC/" "$STAGE/scripts/" >/dev/null 2>&1 || cp -r "$SCRIPTS_SRC/." "$STAGE/scripts/"

|

||||||

|

find "$STAGE/scripts" -type f -name '*.sh' -exec chmod +x {} + 2>/dev/null || true

|

||||||

|

fi

|

||||||

|

mkdir -p "$STAGE/private/argus/agent"

|

||||||

|

|

||||||

|

# 6) Manifest & checksums

|

||||||

|

gen_manifest "$STAGE" "$STAGE/manifest.txt"

|

||||||

|

checksum_dir "$STAGE" "$STAGE/checksums.txt"

|

||||||

|

|

||||||

|

# 7) Move to artifact dir and pack

|

||||||

|

mkdir -p "$PKG_DIR"

|

||||||

|

rsync -a "$STAGE/" "$PKG_DIR/" >/dev/null 2>&1 || cp -r "$STAGE/." "$PKG_DIR/"

|

||||||

|

|

||||||

|

OUT_TAR_DIR="$(dirname "$PKG_DIR")"

|

||||||

|

OUT_TAR="$OUT_TAR_DIR/client_gpu_${VERSION}.tar.gz"

|

||||||

|

log "Creating tarball: $OUT_TAR"

|

||||||

|

(cd "$PKG_DIR/.." && tar -czf "$OUT_TAR" "$(basename "$PKG_DIR")")

|

||||||

|

log "Client-GPU package ready: $PKG_DIR"

|

||||||

|

echo "$OUT_TAR"

|

||||||
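The script derives `REPO` with `${IMAGE%%:*}`, which cuts at the first colon. That works for the default image name, but a reference with a registry port (for example `registry.local:5000/argus/bundle-gpu:latest`) would be reduced to the host alone. A hedged sketch of a more tolerant split, using a hypothetical helper `image_repo` that is not part of this commit and does not handle `@sha256:` digests:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not in the commit): strip a trailing :TAG from an image
# reference without cutting at a registry port such as registry.local:5000/...
image_repo() {
  local ref="$1" last="${1##*/}"     # last path component, e.g. "bundle-gpu:latest"
  if [[ "$last" == *:* ]]; then
    echo "${ref%:*}"                 # drop only the final :TAG
  else
    echo "$ref"                      # no tag present
  fi
}
```

In the script this would be used as `REPO="$(image_repo "$IMAGE")"`; the registry host shown here is an invented example.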

160

deployment_new/build/make_server_package.sh

Executable file

@ -0,0 +1,160 @@

|

|||||||

|

#!/usr/bin/env bash

|

||||||

|

set -euo pipefail

|

||||||

|

|

||||||

|

# Make server deployment package (versioned, per-image tars, full compose, docs, skeleton)

|

||||||

|

|

||||||

|

ROOT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")/../.." && pwd)"

|

||||||

|

TEMPL_DIR="$ROOT_DIR/deployment_new/templates/server"

|

||||||

|

ART_ROOT="$ROOT_DIR/deployment_new/artifact/server"

|

||||||

|

|

||||||

|

# Use deployment_new local common helpers

|

||||||

|

COMMON_SH="$ROOT_DIR/deployment_new/build/common.sh"

|

||||||

|

. "$COMMON_SH"

|

||||||

|

|

||||||

|

usage(){ cat <<EOF

|

||||||

|

Build Server Deployment Package (deployment_new)

|

||||||

|

|

||||||

|

Usage: $(basename "$0") --version YYYYMMDD

|

||||||

|

|

||||||

|

Outputs: deployment_new/artifact/server/<YYYYMMDD>/ and server_YYYYMMDD.tar.gz

|

||||||

|

EOF

|

||||||

|

}

|

||||||

|

|

||||||

|

VERSION=""

|

||||||

|

while [[ $# -gt 0 ]]; do

|

||||||

|

case "$1" in

|

||||||

|

--version) VERSION="$2"; shift 2;;

|

||||||

|

-h|--help) usage; exit 0;;

|

||||||

|

*) err "unknown arg: $1"; usage; exit 1;;

|

||||||

|

esac

|

||||||

|

done

|

||||||

|

if [[ -z "$VERSION" ]]; then VERSION="$(today_version)"; fi

|

||||||

|

|

||||||

|

require_cmd docker tar gzip awk sed

|

||||||

|

|

||||||

|

IMAGES=(

|

||||||

|

argus-master

|

||||||

|

argus-elasticsearch

|

||||||

|

argus-kibana

|

||||||

|

argus-metric-prometheus

|

||||||

|

argus-metric-grafana

|

||||||

|

argus-alertmanager

|

||||||

|

argus-web-frontend

|

||||||

|

argus-web-proxy

|

||||||

|

)

|

||||||

|

|

||||||

|

STAGE="$(mktemp -d)"; trap 'rm -rf "$STAGE"' EXIT

|

||||||

|

PKG_DIR="$ART_ROOT/$VERSION"

|

||||||

|

mkdir -p "$PKG_DIR" "$STAGE/images" "$STAGE/compose" "$STAGE/docs" "$STAGE/scripts" "$STAGE/private/argus"

|

||||||

|

|

||||||

|

# 1) Save per-image tars with version tag

|

||||||

|

log "Tagging and saving images (version=$VERSION)"

|

||||||

|

for repo in "${IMAGES[@]}"; do

|

||||||

|

if ! docker image inspect "$repo:latest" >/dev/null 2>&1 && ! docker image inspect "$repo:$VERSION" >/dev/null 2>&1; then

|

||||||

|

err "missing image: $repo (need :latest or :$VERSION)"; exit 1; fi

|

||||||

|

if docker image inspect "$repo:$VERSION" >/dev/null 2>&1; then

|

||||||

|

tag="$repo:$VERSION"

|

||||||

|

else

|

||||||

|

docker tag "$repo:latest" "$repo:$VERSION"

|

||||||

|

tag="$repo:$VERSION"

|

||||||

|

fi

|

||||||

|

out_tar="$STAGE/images/${repo//\//-}-$VERSION.tar"

|

||||||

|

docker save -o "$out_tar" "$tag"

|

||||||

|

gzip -f "$out_tar"

|

||||||

|

done

|

||||||

|

|

||||||

|

# 2) Compose + env template

|

||||||

|

cp "$TEMPL_DIR/compose/docker-compose.yml" "$STAGE/compose/docker-compose.yml"

|

||||||

|

ENV_EX="$STAGE/compose/.env.example"

|

||||||

|

cat >"$ENV_EX" <<EOF

|

||||||

|

# Generated by make_server_package.sh

|

||||||

|

PKG_VERSION=$VERSION

|

||||||

|

|

||||||

|

# Image tags (can be overridden). Default to versioned tags

|

||||||

|

MASTER_IMAGE_TAG=argus-master:

|

||||||

|

ES_IMAGE_TAG=argus-elasticsearch:

|

||||||

|

KIBANA_IMAGE_TAG=argus-kibana:

|

||||||

|

PROM_IMAGE_TAG=argus-metric-prometheus:

|

||||||

|

GRAFANA_IMAGE_TAG=argus-metric-grafana:

|

||||||

|

ALERT_IMAGE_TAG=argus-alertmanager:

|

||||||

|

FRONT_IMAGE_TAG=argus-web-frontend:

|

||||||

|

WEB_PROXY_IMAGE_TAG=argus-web-proxy:

|

||||||

|

EOF

|

||||||

|

sed -i "s#:\$#:${VERSION}#g" "$ENV_EX"

|

||||||

|

|

||||||

|